6D Assembly Pose Estimation by Point Cloud Registration for Robot Manipulation

Kulunu Samarawickrama, Gaurang Sharma, Alexandre Angleraud and Roel Pieters

Abstract

The demands on robotic manipulation skills to perform challenging tasks have increased drastically in recent times. To perform these tasks with dexterity, robots require perception tools that understand the scene and extract useful information that can be transformed into controller inputs. To this end, modern research has introduced various object pose estimation and grasp pose detection methods that yield precise results. Assembly pose estimation is a secondary yet highly desirable skill in robotic assembly since, unlike bin picking and pick-and-place tasks, it requires specific information on object placement. However, it has often been overlooked in research due to the complexity of integrating it into an agile framework. To address this issue, we propose a simple two-step assembly pose estimation method that takes RGB-D input and 3D CAD models of the associated objects. The framework consists of semantic segmentation of the scene followed by registration of point clouds of local surfaces against target point clouds derived from the CAD models in order to detect 6D poses. We show, using evaluation metrics and demonstrations, that our method can deliver sufficient accuracy for assembling object assemblies.

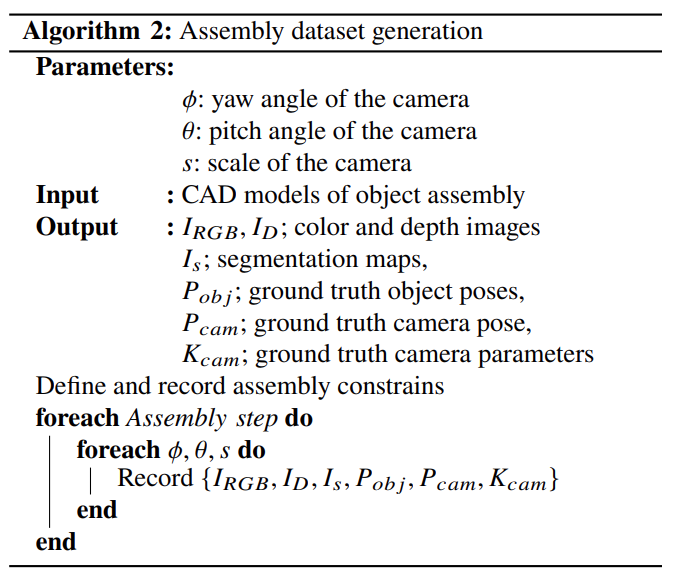

Synthetic Dataset for Object Assemblies

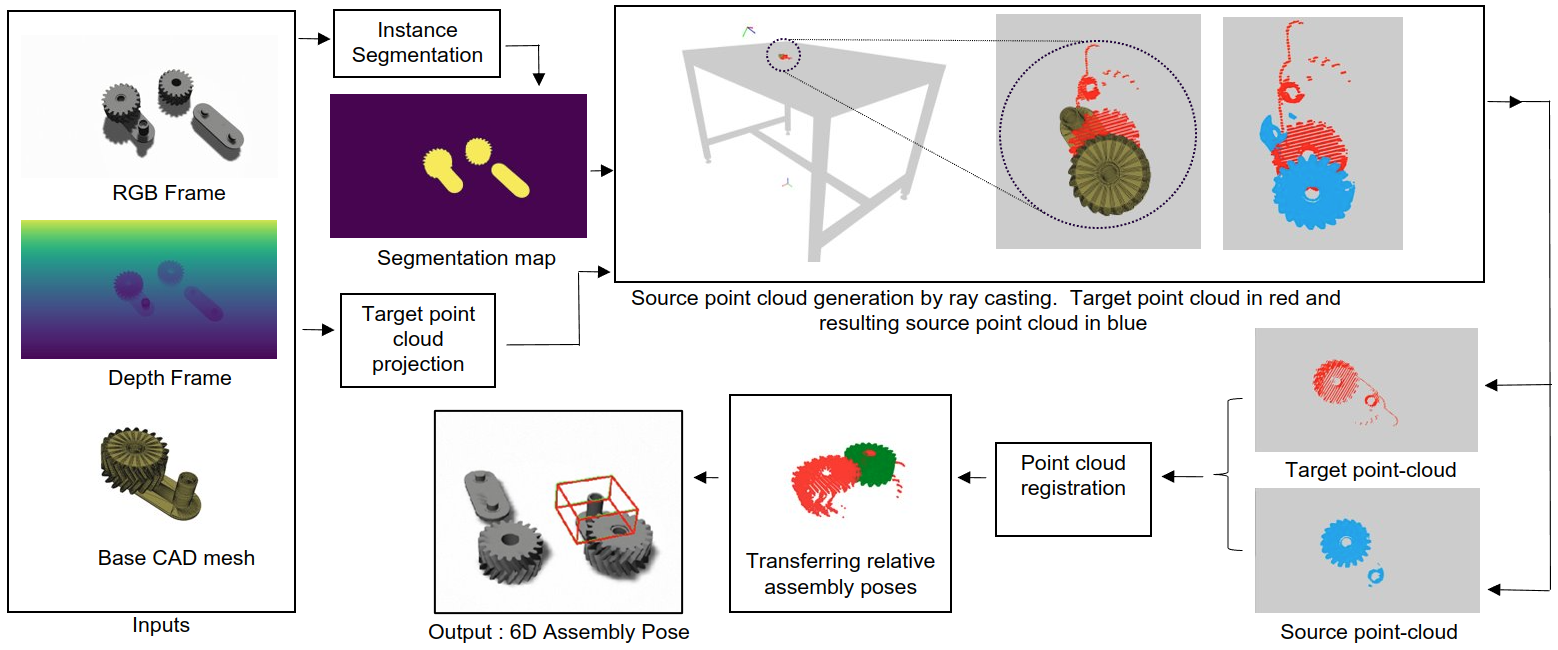

Proposed 6D assembly pose estimation method with (A) RGBD Input, (B) Semantic Segmentation module, (C) Target & Source point cloud projection, (D) Point cloud registration, and (E) Local pose transformation


6D Assembly Pose Estimation

Proposed 6D assembly pose estimation method with (A) RGBD Input, (B) Semantic Segmentation module, (C) Target & Source point cloud projection, (D) Point cloud registration, and (E) Local pose transformation
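
To make steps (C) and (D) more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and it swaps in the Kabsch algorithm with known point correspondences as a stand-in for the registration step, since the text above does not name a specific registration algorithm. A practical registration method would also have to estimate the correspondences. The function names `backproject`, `kabsch`, and `to_homogeneous` are illustrative only.

```python
import numpy as np


def backproject(depth, mask, fx, fy, cx, cy):
    """Lift masked depth pixels to 3D camera-frame points (pinhole model).

    depth: (H, W) array of depths; mask: (H, W) segmentation mask.
    fx, fy, cx, cy are assumed camera intrinsics (hypothetical values).
    """
    valid = np.logical_and(mask, depth > 0)   # keep segmented pixels with depth
    v, u = np.nonzero(valid)                  # pixel rows (v) and columns (u)
    z = depth[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)        # shape (N, 3)


def kabsch(source, target):
    """Least-squares rigid transform (R, t) with R @ source[i] + t ~ target[i].

    Assumption: source[i] corresponds to target[i]. This is a simplification
    of registration, where correspondences are normally unknown.
    """
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    H = (source - src_c).T @ (target - tgt_c)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


def to_homogeneous(R, t):
    """Pack a rotation and translation into a 4x4 pose matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

In this sketch, `backproject` would produce the source cloud from one segmented region of the RGB-D frame, and `kabsch` would align it to a target cloud sampled from the CAD model, giving a 6D pose as a 4x4 matrix via `to_homogeneous`.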